Properties of Sensory Channels

A Taxonomy, and Connections with User Interface Design

Summary

Regardless of what display technologies or other output devices are used, a human ultimately perceives information from a computer through the familiar senses of sight, hearing, touch, etc. This paper explores some of the basic properties of the different sensory channels, classifies them within a taxonomy, and tries to find useful analogies in user interfaces. Finally, it speculates on how aliens might sense their environment and interact with each other. The observations made offer a new way of thinking about certain types of interaction. Note that these observations are not limited to interaction between a human and a computer, but touch on more general kinds of interaction between abstract entities.

Introduction and Motivation

At an anatomical level, there are many details that make each sensory channel different. However, at a more abstract level, some basic differences and similarities are apparent.

For example,

- The information available to us visually depends on the direction we look in. Indeed, to find out whether something is even visible from our current position, we must first look and scan in all directions to see if it is anywhere in sight. Hearing, on the other hand, can be done more passively, since it does not matter which way our head or ears happen to be oriented: the sound around us remains perceptible. (As a consequence, a source of light can sneak up behind us without our noticing it, but a source of noise cannot.)

- Some sensory information, such as light and sound, can travel a great distance from its source to our senses. Other information, such as the faint smell of a flower or the texture of a rough surface, must be actively sought out: we must physically displace ourselves in order to sense the information.

- We can always tell where the source of a light is: visual information implicitly carries with it information about the direction it came from. In contrast, a strong smell that fills the room may have no obvious source.

It is also interesting to think about some of the physical quirks of our senses. For example, if both light and sound are waves, why is it that our eyes can form images from light waves, but our ears cannot form images from sound waves? Or, why is it that our eyes sense only 3 primary colours, but our ears can sense thousands of different frequencies of sound? Even more, why is it that we have 2 eyes, 2 ears, and 2 nostrils? What are the advantages of having pairs of sensory organs, and are the reasons the same in all cases?

Thinking about these and similar issues, I was motivated to try to enumerate the basic, abstract properties of senses and sensory information, and to create a taxonomy. In so doing, I hope to (i) find analogies between the properties of sensory channels and aspects of user interfaces that may help inform the understanding and design of user interfaces and virtual worlds, and (ii) speculate on what sensory channels may be relevant to alien beings on another planet, what the consequences might be for their way of interacting with their environment, and perhaps even what their computer output devices may be like.

Concepts and Terminology


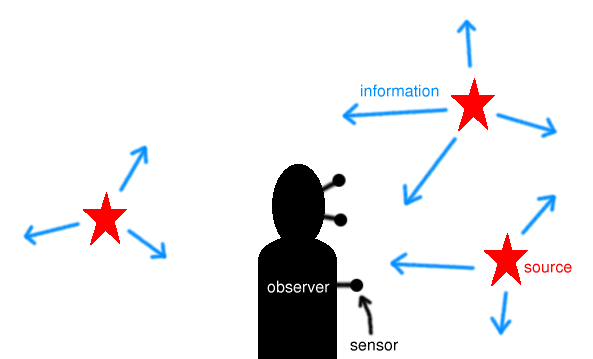

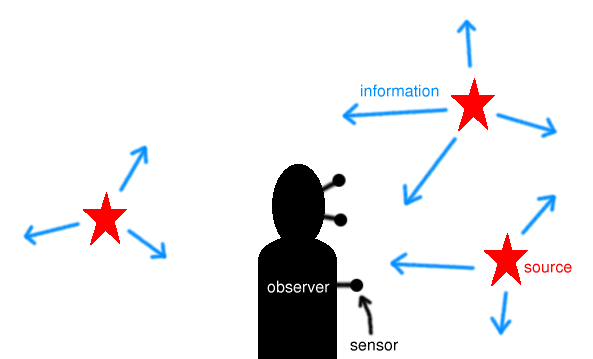

Figure 1.

Imagine an observer, equipped with sensors, who goes out exploring a world full of sources of information. What are the different ways that the observer may acquire information? What different types of sensors might the observer be equipped with?


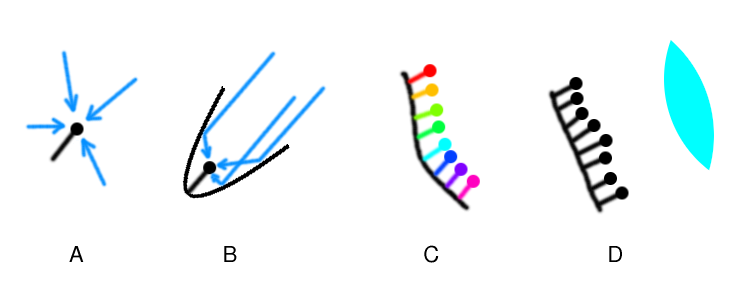

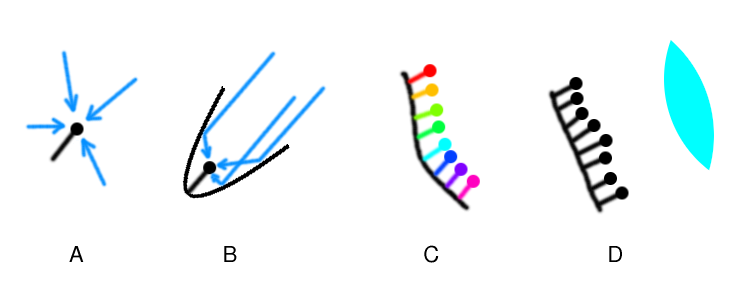

Figure 2.

Different possibilities for sensors.

A: an omnidirectional sensor that does not discriminate between different directions.

B: a parabolic mirror enables the creation of a unidirectional sensor that can be rotated to detect information coming from a specific direction.

C: an array of sensors, each of which is "tuned" to a different information component. (Examples of this arrangement are found in the human ear, where cilia in the cochlea are tuned to detect different frequency components of sound, and in the human nose, where odours are detected by receptors tuned to various different molecules.)

D: an array of sensors combined with a lens, enabling the formation of an image. (Cones on the human retina work like this; in addition, there are three different types of cones, most sensitive to long, medium, and short wavelengths of light, loosely corresponding to red, green, and blue respectively.)

It would also be possible to form an image using an array of unidirectional sensors, such as the one in B, each pointing in a slightly different direction.
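To make the difference between sensors A, B, and D concrete, here is a minimal Python sketch under a deliberately simplified, hypothetical model: the world is a ring of point sources, each given as a bearing in degrees and an intensity, and a unidirectional sensor simply sums the sources that fall inside its acceptance cone. All names (`SCENE`, `omnidirectional`, `unidirectional`, `scan`, `image`) are illustrative assumptions, not part of the taxonomy itself.

```python
# Hypothetical scene: point sources as (bearing in degrees, intensity).
SCENE = [(30.0, 1.0), (200.0, 0.5)]


def offset(bearing, facing):
    """Signed angle from `facing` to `bearing`, in degrees within [-180, 180)."""
    return (bearing - facing + 180.0) % 360.0 - 180.0


def omnidirectional(scene):
    """Sensor A: total intensity, with no clue about direction."""
    return sum(intensity for _, intensity in scene)


def unidirectional(scene, facing, half_width=10.0):
    """Sensor B: only sources inside its acceptance cone register."""
    return sum(intensity for bearing, intensity in scene
               if -half_width <= offset(bearing, facing) < half_width)


def scan(scene, step=10.0):
    """Rotate sensor B step by step and return the strongest facing."""
    facings = [k * step for k in range(int(360 / step))]
    return max(facings, key=lambda f: unidirectional(scene, f, step / 2))


def image(scene, n_pixels=36):
    """Sensor D (or an array of B's): each element aimed at its own bearing."""
    step = 360.0 / n_pixels
    return [unidirectional(scene, k * step, step / 2) for k in range(n_pixels)]


print(omnidirectional(SCENE))  # 1.5: how much, but not where
print(scan(SCENE))             # 30.0
```

The omnidirectional reading reports how much is out there but not where; a single unidirectional sensor must be rotated (`scan`) to find the source, which is the kind of scanning effort the taxonomy calls semi-active; an array of differently aimed sensors captures every direction at once.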


Taxonomy of Sensory Channels

The table below is a taxonomy showing different properties of information and sensors, and where the human senses (and some other instruments) fall within it. Objections could be raised to some of the classifications, and many details and possible extra dimensions have been omitted. However, this taxonomy is proposed as a useful compromise between excessive detail and uninformative simplicity. The colour coding of the table gives an indication of the effort involved in each sensory activity.

| Components within the information | Spatially localized (information can't travel far from its source) | Propagates across space¹, diffusing or meandering | Propagates across space¹ in a straight line (rectilinear propagation)²; omnidirectional sensors (orientation of sensor doesn't matter) | Straight line²; unidirectional sensors³ (detect information coming from a given direction); small number of sensor(s) | Straight line²; unidirectional sensors³; large array of sensors that form an image |
|---|---|---|---|---|---|
| Sensors cannot distinguish different components (e.g. frequencies) within the information | cutaneous sensing of texture; cutaneous sensing of temperature | | light meter; noise meter; ocelli (simple eyes) | | |
| Components can be filtered or independently detected: a small number of different components are sensed by different sensors | | | | | human eye |
| Components can be filtered or independently detected: sensors can be tuned to detect different components | | | tunable radio receiver | radio telescope | |
| Components can be filtered or independently detected: large array of sensors that each detect a different, fixed component (so an entire range of components is perceived simultaneously) | human taste; faint odours (e.g. scent of a flower); faint sounds (e.g. whisper) | human smell | human ear | "parabolic"-shaped ear; echolocation in bats (?) | |

Colour legend:

- Active sensing: To obtain information, the observer must use locomotion to displace herself (or an appendage, such as a finger or antenna). She must go to the information.
- Semi-active sensing: Although information flows to the observer, the observer may have to scan in different directions or across different components to find the information.
- Passive sensing: The information is ambient or pervasive, constantly flowing to the observer, who can perceive it (as if it were always in the background) without effort.

Footnotes (regarding the determination of direction and distance to a source of information):

¹ In this case, an observer equipped with a pair of sensors could detect the approximate gradient, i.e. the direction from which the information is coming. This is one reason, for example, that humans have two ears, and it may be the reason many animals have two nostrils (although dogs are often seen waving their snout to and fro when sniffing the air, suggesting how gradient detection can be done with a single sensor).
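The pair-of-sensors idea in footnote 1 can be sketched as follows. This is a hypothetical model, not a description of any real nose or ear: the odour field is an invented function that decays with squared distance, and the observer only compares a left and a right reading to decide which way to turn. The second steering function makes the same comparison with one sensor sampled at two successive moments, as a dog swinging its snout might.

```python
import math


def concentration(x, y, source=(0.0, 1.0)):
    """Hypothetical odour field: intensity falls off with squared distance."""
    dx, dy = x - source[0], y - source[1]
    return 1.0 / (1.0 + dx * dx + dy * dy)


def side_positions(x, y, heading, half_spacing):
    """Left and right sensor positions, perpendicular to the heading (radians)."""
    lx, ly = -math.sin(heading), math.cos(heading)  # unit vector to the left
    left = (x + half_spacing * lx, y + half_spacing * ly)
    right = (x - half_spacing * lx, y - half_spacing * ly)
    return left, right


def turn_toward(left_reading, right_reading, eps=1e-12):
    """+1 = turn left, -1 = turn right, 0 = no detectable difference."""
    diff = left_reading - right_reading
    if abs(diff) < eps:
        return 0
    return 1 if diff > 0 else -1


def steer_with_pair(field, x, y, heading, half_spacing=0.05):
    """Two sensors (two nostrils, two ears) read the field at the same moment."""
    left, right = side_positions(x, y, heading, half_spacing)
    return turn_toward(field(*left), field(*right))


def steer_with_one_sensor(field, x, y, heading, half_spacing=0.05):
    """One sensor swung to and fro: the same comparison made at two successive
    moments, which is only valid if the field does not change in between."""
    left, right = side_positions(x, y, heading, half_spacing)
    first = field(*left)    # snout swung to the left
    second = field(*right)  # then swung to the right
    return turn_toward(first, second)


# Observer at the origin facing +x; the source lies to its left at (0, 1).
print(steer_with_pair(concentration, 0.0, 0.0, heading=0.0))  # 1 (turn left)
```

In this static model the two functions always agree; the single-sensor version trades a second organ for time, and would be fooled by a field that changes between the two samples.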

² In this case, if the information travels at a constant speed, then an observer equipped with three sensors could conceivably estimate the direction and distance to a source of information from the differences between the times at which the information reaches each sensor. Seismologists use this technique to determine the epicentre of an earthquake. It is also possible to emit a pulse of information and use a single sensor to measure the time for the pulse to return, to determine the distance to an object. This principle is used in radar, sonar, and many auto-focus cameras.
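Both techniques in footnote 2 reduce to a few lines of arithmetic. The sketch below assumes a flat, two-dimensional world, a known constant propagation speed, and sensor positions known to the observer; the brute-force grid search in `locate` is a deliberately simple stand-in for the more careful solvers used in practice, and all function names are hypothetical.

```python
import math


def echo_distance(round_trip_s, speed):
    """Pulse-echo ranging: the pulse travels out and back, so halve the path."""
    return speed * round_trip_s / 2.0


def arrival_times(source, sensors, speed, emitted_at):
    """Simulated arrival times of a signal emitted at a moment the observer
    does not know."""
    return [emitted_at + math.dist(source, s) / speed for s in sensors]


def locate(times, sensors, speed, extent=10.0, step=0.1):
    """Grid search for the point whose arrival-time differences (relative to
    sensor 0) best match the observed ones. Only differences are used,
    because the emission time is unknown."""
    observed = [t - times[0] for t in times[1:]]
    n = int(round(2 * extent / step)) + 1
    best, best_err = None, float("inf")
    for i in range(n):
        for j in range(n):
            p = (-extent + i * step, -extent + j * step)
            d0 = math.dist(p, sensors[0])
            err = sum(((math.dist(p, s) - d0) / speed - obs) ** 2
                      for s, obs in zip(sensors[1:], observed))
            if err < best_err:
                best, best_err = p, err
    return best


print(echo_distance(0.2, speed=1500.0))  # 150.0 (sonar in water, ~1500 m/s)

sensors = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]  # metres
times = arrival_times((3.0, 4.0), sensors, speed=343.0, emitted_at=5.0)
print(locate(times, sensors, speed=343.0))  # approximately (3.0, 4.0)
```

Because `locate` works only with arrival-time differences, the unknown emission time cancels out; that is why three sensors are needed in the plane (two position coordinates plus one unknown emission time), whereas the echo method needs just one sensor because the emitter knows when the pulse left.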

³ In this case, the direction to a source is of course implied by the orientation of the sensor. Furthermore, an observer equipped with two sensors could use triangulation to estimate the distance to the source of information. Humans use their two eyes this way.
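The triangulation mentioned in footnote 3 follows from the law of sines. In the minimal sketch below, each sensor reports only the angle between the baseline joining the two sensors and its own line of sight; the 6.5 cm baseline in the example is an assumed, typical distance between human eyes, and the function names are illustrative.

```python
import math


def triangulate(baseline, alpha, beta):
    """Perpendicular distance from the baseline to a target.

    `alpha` and `beta` are the angles (radians) between the baseline and the
    lines of sight of the left and right sensors respectively.
    """
    gamma = math.pi - alpha - beta  # angle at the target
    if gamma <= 0:
        raise ValueError("lines of sight do not converge in front of the sensors")
    # Law of sines gives the range from the left sensor ...
    range_left = baseline * math.sin(beta) / math.sin(gamma)
    # ... and its component perpendicular to the baseline is the depth.
    return range_left * math.sin(alpha)


def fixation_angle(baseline, depth):
    """Angle each sensor makes with the baseline when both fixate a target
    straight ahead at `depth`."""
    return math.atan2(depth, baseline / 2.0)


eye_gap = 0.065  # metres; assumed distance between the two eyes
a = fixation_angle(eye_gap, 1.0)
print(math.degrees(a))             # about 88.1
print(triangulate(eye_gap, a, a))  # 1.0, up to rounding
```

With both eyes converged on a point 1 m straight ahead, each line of sight departs from perpendicular by less than 2 degrees, which hints at why depth judged from the eyes' convergence alone becomes coarse at larger distances.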

Analogies in User Interfaces

The active-passive scale

Sellen et al. [sellen1992] identify five different dimensions for characterizing feedback. One of these is "demanding" versus "avoidable" feedback, denoting whether the user can choose to monitor or ignore the feedback. This is very similar to the active-passive scale (see the definitions of Active, Semi-active, and Passive Sensing in the colour legend) used in the above taxonomy of sensory channels.

In this paper, however, rather than limiting our attention to feedback, we wish to describe any information that may be "out there", e.g. on a computer, and of potential interest to a user. The active-passive (or avoidable-demanding) scale serves as a measure of the effort required to retrieve a given piece of information. From a design perspective, we would like important or frequently accessed information to be retrievable with as little effort as possible. We now consider different schemes for achieving this within a user interface.

A good example of passively sensed (or demanding, or ambient) information is audio output. Sellen et al. [sellen1992] cite work by Monk [monk1986]: "Monk argued that sound is a good choice for system feedback in that users do not constantly look at the display while working." [sellen1992, p. 142]

Indeed, as already indicated by our taxonomy, auditory information from a computer can be perceived without effort on the part of the user (assuming the user has not become so habituated to the audio output that she is simply ignoring it). Baecker et al. agree, using the words "ubiquitous" and "localized" [baecker1995, p. 526] to describe audio and visual channels, respectively.

Another potential channel for delivering ambient information is smell. Although there are practical problems, devices that output different odours do exist [kaye2001].

Some sensory channels which our taxonomy classifies as active or semi-active (i.e. requiring effort) can be made more passive in a user interface setting by virtue of the limitations of the output devices involved. For example, if a computer is equipped with a small, low-resolution screen, and the user has no reason to look away from the screen, we may assume that the user can passively sense all information on the screen without ever changing their general direction of gaze. Similarly, if the user's hand never leaves a mouse, the user can passively sense the kinesthetic feedback of pressing a mouse button down without having to first move their finger to the button.

One scheme, then, for output of visual information that can be sensed passively (or almost passively) is to make the information occupy a large area of the screen, so that it is visible wherever the user looks. This scheme does not preclude the display of other information.

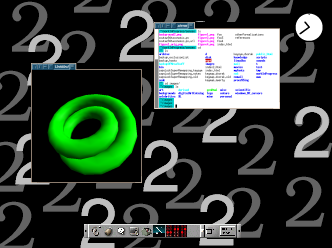

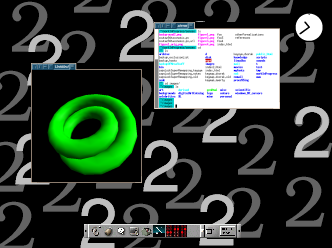

An anecdotal example helps explain this. Users of window managers with multiple virtual desktops can often keep track of the currently active desktop using a small, zoomed-out "map" of all desktops, typically located in a corner of the screen. I once encountered a user who, to avoid having to glance at such a map, had configured his system to change the background image whenever he changed desktops. The background images were chosen to make it easy to see which desktop he was currently in (Figure 3), wherever his attention happened to be focused on the screen.


Figure 3.

A screenshot showing a background "wallpaper" image that reminds the user they are in virtual desktop #2. Wherever the user happens to look, the numeral 2 is easily perceived in the background image, which creates a kind of ambient (or passively sensed) information.


Sellen et al. [sellen1992] used a similar strategy to try to make visual feedback as salient as possible. In their studies of mode errors, the visual feedback used to indicate a mode change was a change in the background colour of the entire screen.

Another possibility for making visual information easily perceived is to place it at a common focal point of the user, e.g. the mouse cursor, so that it is obtrusive and unlikely to go unnoticed. Many user interfaces change the mouse cursor depending on the system's current mode.

McGuffin et al. [mcguffin2002b] describe the design of an interaction technique in which a slider widget temporarily becomes attached to the mouse cursor. They suggest that this creates "visual tension" [mcguffin2002b, p. 41], analogous to kinesthetic tension [buxton1986, sellen1992], which reminds the user of what is occurring.

As we move along the scale from passive sensing to active sensing, we encounter the notion of displaying information in a corner of the screen or in a status bar. Although such information is always visible, the user must make a (small) effort to look at it, and may easily forget or otherwise fail to do so. In keeping with the taxonomy of sensory channels, we may say that such information is sensed semi-actively.

This partly motivates the idea of using gaze as a channel for input [ware????,zhai????,vertegaal2002]: it arguably requires less effort to "point" with one's eyes than with one's hand or finger. Indeed, Argyle and Cook point out that gaze "must be treated both as a channel and a signal" [argyle1976, p. xi].

Finally, although a user sitting at a desktop computer need not displace their body very much, they nonetheless do navigate and travel through virtual worlds, which motivates the notion of active sensing on a computer. Any time a user must, for example, click on a button to bring up information, scroll to a desired area, or fly through a virtual world to find something, they are actively retrieving information. This is analogous to an observer who actively goes out into their environment to acquire tactile information.

An interesting scheme for making such navigation easier was suggested by Pierce et al. [pierce1999]. In 3D virtual worlds, the user often needs to "look around" from their point of view to orient themselves. This is relatively easy and natural to do in the real world; however, in many interfaces for navigating 3D, it can be cumbersome. Pierce et al. introduced the notion of "glances" to better support panning in a virtual 3D world: the user can quickly look away from their current direction, and when they are done glancing in the new direction, their view snaps back to the previous direction.
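The snap-back behaviour of a glance can be captured by a tiny state machine. The class below is a hypothetical sketch restricted to yaw (left-right rotation) and is not Pierce et al.'s implementation: a glance remembers the committed view direction and offsets from it, ending the glance restores that direction, and an ordinary turn changes the committed direction permanently.

```python
class GlanceCamera:
    """Hypothetical yaw-only camera supporting glances.

    `yaw` is the current view direction in degrees. A glance temporarily
    offsets the view; ending it snaps back to the saved direction.
    """

    def __init__(self, yaw=0.0):
        self.yaw = yaw
        self._home = None  # direction to return to while a glance is active

    def glance(self, offset):
        """Look `offset` degrees away from the direction held before the glance."""
        if self._home is None:
            self._home = self.yaw
        self.yaw = (self._home + offset) % 360.0

    def end_glance(self):
        """Snap back to the direction held before the glance began."""
        if self._home is not None:
            self.yaw, self._home = self._home, None

    def turn(self, delta):
        """An ordinary, persistent change of direction (ends any glance first)."""
        self.end_glance()
        self.yaw = (self.yaw + delta) % 360.0


cam = GlanceCamera(yaw=10.0)
cam.glance(90.0)   # peek to the side
cam.end_glance()   # the view snaps back
print(cam.yaw)     # 10.0
```

Offsets are measured from the saved direction rather than accumulated, so a user can glance left and then right within one glance without drifting away from where they started.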

In summary, we have seen how the active-passive scale for classifying sensory channels has direct analogies within user interfaces.

Speculations on Extra-terrestrial Life

It is interesting to imagine alien beings in alien environments whose sensory organs are variations on human senses, or that fill in some of the blanks in the taxonomy. This exercise can lead to thought experiments akin to Thomas Nagel's oft-cited "What Is It Like to Be a Bat?" [nagel1974] [dennett1991, pp. 441–448] (see Dawkins [dawkins1986, Chapter 2: Good Design] for a description of the auditory system of bats, and how they can, in some sense, "see" with sound).

Many of us are taught from an early age about the 3 primary colours of light. Mixing together these 3 colours allows a wide range of visible colours to be synthesized. What is perhaps not as emphasized, however, is that these 3 primary colours are merely an artifact of human biology: the colour associated with a given distribution of light is determined by the excitation levels of the 3 types of cones in our retina. Each type of cone responds to a broad band of wavelengths but reports only a single level of excitation, so the cones effectively reduce a continuous spectral distribution to just 3 numbers, with sensitivities peaking at long, medium, and short wavelengths (loosely, red, green, and blue). Alien beings with 5 different kinds of cones, for example, would be able to see a richer range of colours, and could perceive differences between colours that are indistinguishable to a human.
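Reducing a spectrum to 3 numbers has a concrete consequence: physically different spectra can produce identical cone responses (such pairs are called metamers). The sketch below builds one using invented, bell-shaped sensitivity curves with assumed peaks near 440, 540, and 565 nm, plus two extra receptor types for a hypothetical 5-cone alien. It adds to a flat spectrum a component that all three human curves are blind to, found by projecting out their span.

```python
import math

WAVELENGTHS = range(400, 701, 10)  # nm: coarse samples of the visible range


def sensitivity(peak, width=40.0):
    """Invented bell-shaped sensitivity curve (real cone curves differ)."""
    return [math.exp(-(((w - peak) / width) ** 2)) for w in WAVELENGTHS]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def responses(spectrum, receptors):
    """Each receptor type collapses the whole spectrum into one number."""
    return [dot(spectrum, r) for r in receptors]


def invisible_part(v, curves):
    """Remove from `v` everything the given curves respond to, by projecting
    out their span (Gram-Schmidt orthonormalisation)."""
    basis = []
    for c in curves:
        u = list(c)
        for q in basis:
            k = dot(u, q)
            u = [x - k * y for x, y in zip(u, q)]
        norm = math.sqrt(dot(u, u))
        basis.append([x / norm for x in u])
    r = list(v)
    for q in basis:
        k = dot(r, q)
        r = [x - k * y for x, y in zip(r, q)]
    return r


HUMAN = [sensitivity(p) for p in (440, 540, 565)]     # assumed peaks, in nm
ALIEN = HUMAN + [sensitivity(p) for p in (490, 610)]  # two invented extra types

flat = [1.0] * len(WAVELENGTHS)
hidden = invisible_part(sensitivity(490), HUMAN)      # human cones are blind to this
scale = 0.5 / max(abs(x) for x in hidden)             # keeps every value positive
metamer = [f + scale * h for f, h in zip(flat, hidden)]

human_gap = max(abs(a - b) for a, b in zip(responses(flat, HUMAN), responses(metamer, HUMAN)))
alien_gap = max(abs(a - b) for a, b in zip(responses(flat, ALIEN), responses(metamer, ALIEN)))
print(human_gap)  # rounding-level only
print(alien_gap)  # clearly non-zero
```

The two spectra look identical to the three human curves, while the alien's extra receptor peaking at 490 nm responds differently to them, which is the sense in which a 5-cone being could distinguish colours a human cannot.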

Even more difficult to imagine is an alien capable of directly sensing hundreds of different frequencies of light (much like how the cilia in a human's inner ear are tuned to hundreds of different frequencies of sound). Would such beings experience light as a kind of music, with rich melodic and tonal qualities? Would such beings "sing" luminous songs with special organs?

Another bizarre possibility is that some aliens may have sensory organs which can be tuned to detect different narrow bands of frequencies, not unlike how a radio receiver is tuned. If an alien species had, for example, tunable acoustic sensors ("ears"), and could also tune the pitch of their voice to fall within one of many corresponding frequency bands, it might be possible for a room full of such aliens to carry on multiple, simultaneous conversations, without separate conversational groups being audible to each other. (This is akin to how many conversations can take place over radio waves all at once without interfering with each other, because they take place on different frequency bands.)

One could even imagine two aliens carrying on a private conversation within a secret frequency band, and a third "spy" alien who sees their mouths moving and decides to try scanning different audio frequencies with her ears until she finds the right band for eavesdropping.

This example shows how communication can involve multiple, interacting sensory channels: although the first two aliens tried to keep their conversation private by using a secret audio band, they did not hide the visual cue of their moving mouths from the eyes of the third alien.
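The radio analogy, including the spy's search, can be sketched with pure tones standing in for voices. In this hypothetical sketch each conversation occupies a single frequency, a "tuned ear" measures the energy at one frequency using the Goertzel algorithm (a standard way to compute a single discrete Fourier transform bin), and the spy sweeps her tuning across candidate bands. The sample rate, window length, and frequencies are arbitrary choices.

```python
import math

RATE = 8000  # samples per second (arbitrary)
N = 800      # 0.1 s window, so frequency bins are 10 Hz apart


def voice(freq, amp=1.0):
    """A pure tone standing in for one conversation's frequency band."""
    return [amp * math.sin(2 * math.pi * freq * i / RATE) for i in range(N)]


def mix(*signals):
    """Sound waves in the same room simply add together."""
    return [sum(samples) for samples in zip(*signals)]


def tuned_ear(signal, freq):
    """Energy at one frequency, via the Goertzel algorithm (one DFT bin)."""
    n = len(signal)
    k = round(n * freq / RATE)
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s1 = s2 = 0.0
    for x in signal:
        s1, s2 = x + coeff * s1 - s2, s1
    return s1 * s1 + s2 * s2 - coeff * s1 * s2


def spy_scan(signal, candidates, fraction=0.1):
    """Sweep the tuning across candidate bands; keep those carrying energy."""
    powers = {f: tuned_ear(signal, f) for f in candidates}
    loudest = max(powers.values())
    return [f for f in candidates if powers[f] > fraction * loudest]


room = mix(voice(300), voice(1200))           # two private conversations
print(spy_scan(room, range(100, 2001, 100)))  # [300, 1200]
```

An ear tuned to 300 Hz hears essentially nothing of the 1200 Hz conversation sharing the same room, because both tones complete a whole number of cycles in the window and so do not leak into each other's bins; the spy, lacking the secret, has to pay for her eavesdropping by sweeping through every candidate band.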

Gaze and mutual gaze in aliens

In humans, eyes serve both to take in visual information from the environment and to send signals out to others. In the very act of gazing somewhere, we cannot help but reveal (to anyone looking at us) where we are looking, unless we are wearing sunglasses.

We are all familiar with the social interaction this can result in. For example, when we "catch" someone else staring at us, we may feel threatened, challenged, flattered, etc., and we quickly decide whether to look away or return the stare.

It is interesting that humans are one of the few primates with a white sclera surrounding the darker iris; this may serve to enhance the visibility of our direction of gaze [kobayashi2001a,kobayashi2001b].

The state of mutual gaze, or eye contact, between two people is particularly interesting. Not only do the two individuals become aware of each other's gaze, they are also each aware that the other is aware of this. We might even be tempted to suppose that each is aware of the fact that the other is aware of this latter statement, and so on, ad infinitum. Of course, such an infinite sequence of deductions can't really be what happens in our minds. Somehow, it seems sufficient that the two parties involved be aware that their knowledge of the situation is shared.

In any case, mutual gaze is a psychologically significant situation: we know that one effect of being looked at is heightened arousal [argyle1965][vertegaal2002]. It has also been of interest more generally: the famous philosopher Jean-Paul Sartre [sartre1943] regarded mutual gaze "as the key to 'inter-subjectivity'" [argyle1976, p. x].

It is probably true that mutual gaze constitutes a kind of "strange loop" or "tangled hierarchy", as defined by Hofstadter [hofstadter1979]. When one eye looks at another eye, the former becomes a meta-eye: an eye that sees other eyes, like a sentence talking about other sentences, or a vacuum cleaner that cleans other vacuum cleaners.

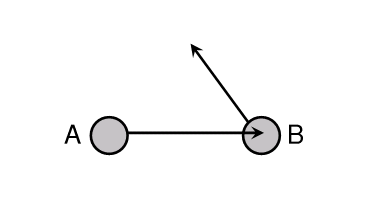

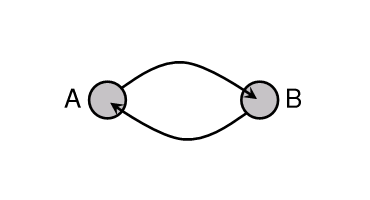

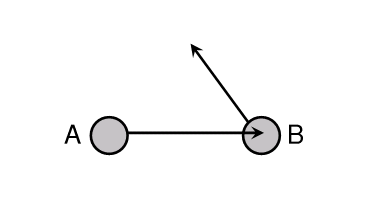

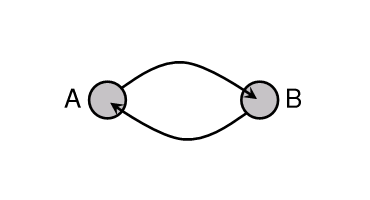

We can represent gaze abstractly using arrows. Figure 4 shows one individual gazing at another, and Figure 5 shows two individuals engaged in mutual gaze. It is easiest to think about these figures in terms of the human visual channel; however, the notion of gaze can be generalized to other sensory channels.

Imagine an alien species with a sensory organ that can somehow be adjusted (in orientation, tuning, or otherwise) to perceive different information, and suppose that this sensory organ reveals the state of its own adjustment, emitting information about itself that can be perceived by other individuals.

In the case of humans, the eyes are adjusted (in orientation) to perceive information coming from different directions, and the eyes themselves emit a (visual) signal of where they are looking.

By analogy, one can imagine an alien whose ears can be tuned to a given frequency band, and which may also (due to evolution?) give off a sound of a specific frequency to signal its current tuning. Two such aliens could engage in mutual "acoustic" gaze by simultaneously perceiving each other's tuning.

Thus, in the notation used in Figures 4 and 5, if an arrowhead touches the sensory organ of individual X, this means that the originator Y of the arrow can perceive the tuning/orientation/adjustment of X's sensory organ.


Figure 4.

A can see where B is looking, but B cannot see A. Furthermore, A knows that B is oblivious to A's gaze. If asked to draw a diagram representing this situation, only A would be able to draw the above diagram.


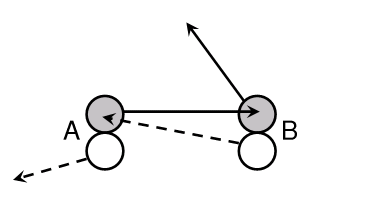

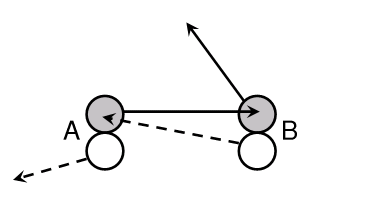

Figure 5.

Mutual gaze, or eye contact, as it occurs (for example) in humans. A can see that B is looking at A, and B can see that A is looking at B. Their knowledge of the situation is symmetric, and furthermore A and B each know that their knowledge is shared. If asked to draw a diagram representing this situation, A and B would each be able to draw the above diagram.


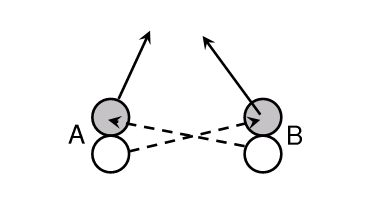

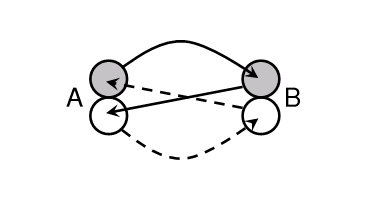

Things become more interesting if we allow a second sensory channel to play a role in gaze. In Figures 6, 7, and 8, each individual is equipped with a second sensory organ which senses information through a second channel (dashed lines). We might imagine that the first and second channels are visual and acoustic (e.g. perhaps the aliens emit a sound whose frequency indicates the orientation of their eyes), or perhaps both channels are visual, with one involving "visible" light and the other infrared light.


Figure 6.

A can see where B is looking. However, unbeknownst to A, B can also sense where A is looking, through a second sensory channel (represented by dashes). So B knows that A knows where B is looking, but A does not know this.


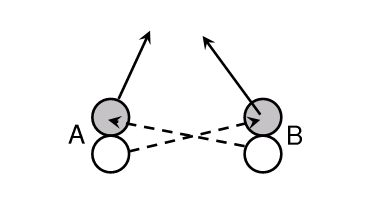

Figure 7.

Quasi-mutual gaze. Through a second sensory channel (represented by dashes), A and B can each sense where the other is looking. However, neither A nor B is aware that the other can sense where they are looking. Hence, although the situation is symmetric, and the knowledge that A and B each have of the situation is symmetric, neither of them would be able to draw the above diagram if asked to. Each of A and B thinks that they are covertly spying on the other. It would seem, then, that none of the normal phenomena associated with mutual gaze (e.g. signalling a threat, dominance, interest, or love) could occur in this situation. (Note that the quasi-mutual gaze represented here is somewhat akin to two humans looking at each other through sunglasses, neither of them realizing that their stares are being returned. Of course, this would only involve one sensory channel, whereas in the diagram we have two channels.)


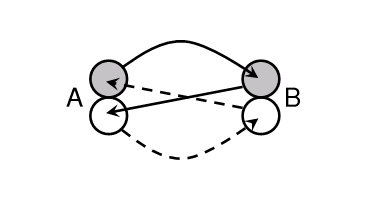

Figure 8.

Mutual "gaze" involving two sensory channels. This is an elaborate situation where A and B each use their two sensory organs to gain awareness of the other's two sensory organs. Each of A and B can deduce symmetric knowledge of the situation, and also deduce that this knowledge is shared. If asked to draw a diagram of the situation, each of A and B would be able to draw the above diagram.
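The arrow diagrams of Figures 4 to 8 can be encoded as lists of arrows and checked mechanically. The rule used below is an assumption introduced for this sketch, one possible reading of the notation: an individual knows about an arrow if it originates that arrow, or if it itself senses the organ from which the arrow originates (since sensing an organ reveals where that organ is aimed). "Able to draw the diagram" then means knowing every arrow. The arrow lists are assumed reconstructions from the captions, with `"second"` naming the organ of the second (dashed) channel, and all names are hypothetical.

```python
from typing import NamedTuple


class Arrow(NamedTuple):
    """`who`'s organ `via` perceives the adjustment of `whom`'s organ `of`."""
    who: str
    via: str
    whom: str
    of: str


def knows(individual, arrow, arrows):
    """Assumed rule: you know the arrows you originate, plus any arrow whose
    sensing organ you yourself sense (sensing an organ reveals its aim)."""
    if arrow.who == individual:
        return True
    return any(a.who == individual and a.whom == arrow.who and a.of == arrow.via
               for a in arrows)


def can_draw(individual, arrows):
    """Could `individual` draw the whole diagram from what they perceive?"""
    return all(knows(individual, a, arrows) for a in arrows)


FIG4 = [Arrow("A", "eye", "B", "eye")]
FIG5 = FIG4 + [Arrow("B", "eye", "A", "eye")]
FIG6 = FIG4 + [Arrow("B", "second", "A", "eye")]
FIG7 = [Arrow("A", "second", "B", "eye"), Arrow("B", "second", "A", "eye")]
FIG8 = FIG5 + [Arrow("A", "second", "B", "second"),
               Arrow("B", "second", "A", "second")]

for name, fig in [("4", FIG4), ("5", FIG5), ("6", FIG6), ("7", FIG7), ("8", FIG8)]:
    print(name, can_draw("A", fig), can_draw("B", fig))
```

Under this rule the results agree with the captions: only A can draw Figure 4, both can draw Figures 5 and 8, only B can draw Figure 6 (B knows that A knows where B is looking), and neither can draw Figure 7, the quasi-mutual gaze in which each thinks they are covertly spying on the other.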


Finally, we may note that all the scenarios of mutual gaze considered so far have involved only two individuals. Of course, if the "gaze" is done using unidirectional sensors, such as the human eye, then a "line of sight" is necessarily involved, limiting mutual staring to dyadic groups. However, were a different, omnidirectional channel involved, it could be possible for N parties to be locked in a mutual, shared, and simultaneous sensing of each other. I leave it to the reader to wonder what kind of social interaction such N-way mutual gaze could lead to.

Acknowledgements

Thanks to Brian Wong for providing some initial reactions to this work.

References

@book{argyle1976,
  author = {Michael Argyle and Mark Cook},
  title = {Gaze and mutual gaze},
  year = 1976,
  publisher = {Cambridge University Press}
}

@article{argyle1965,
  author = {Michael Argyle and J. Dean},
  title = {Eye-contact, Distance and Affiliation},
  journal = {Sociometry},
  year = 1965,
  volume = 28,
  pages = {289--304}
}

@book{baecker1995,
  author = {Ronald M. Baecker and Jonathan Grudin and William A. S. Buxton and Saul Greenberg},
  title = {Readings in Human-Computer Interaction: Toward the Year 2000},
  year = 1995,
  publisher = {Morgan Kaufmann},
  edition = {2nd}
}

@inproceedings{buxton1986,
  author = {William A. S. Buxton},
  title = {Chunking and phrasing and the design of human-computer dialogues},
  booktitle = {Information Processing '86, Proceedings of the IFIP 10th World Computer Congress},
  year = 1986,
  pages = {475--480}
}

@book{dawkins1986,
  author = {Richard Dawkins},
  title = {The Blind Watchmaker},
  year = 1986,
  publisher = {Longman Scientific and Technical}
}

@book{dennett1991,
  author = {Daniel C. Dennett},
  title = {Consciousness Explained},
  year = 1991,
  publisher = {Little, Brown and Company}
}

@book{hofstadter1979,
  author = {Douglas R. Hofstadter},
  title = {G\"odel, Escher, Bach: An Eternal Golden Braid},
  year = 1979,
  publisher = {Vintage Books}
}

@mastersthesis{kaye2001,
  author = {Joseph N. Kaye},
  title = {Symbolic Olfactory Display},
  school = {Massachusetts Institute of Technology},
  year = 2001,
  note = {http://web.media.mit.edu/\~jofish/thesis/}
}

% http://www.saga-jp.org/coe_abst/kobayashi.htm

@article{kobayashi2001a,
  author = {Hiromi Kobayashi and Shiro Kohshima},
  title = {Unique morphology of the human eye and its adaptive meaning: Comparative studies on external morphology of the primate eye},
  year = 2001,
  journal = {Journal of Human Evolution},
  volume = 40,
  pages = {419--435}
}

% http://www.saga-jp.org/coe_abst/kobayashi.htm

@inbook{kobayashi2001b,
  author = {Hiromi Kobayashi and Shiro Kohshima},
  title = {Primate Origin of Human Cognition and Behavior},
  editor = {T. Matsuzawa},
  chapter = {Evolution of the human eye as a device for communication},
  pages = {383--401},
  year = 2001,
  publisher = {Springer-Verlag, Tokyo}
}

@inproceedings{mcguffin2002b,
  author = {Michael McGuffin and Nicolas Burtnyk and Gordon Kurtenbach},
  title = {{{FaST} Sliders: Integrating {Marking Menus} and the Adjustment of Continuous Values}},
  booktitle = {Proceedings of Graphics Interface 2002},
  year = 2002,
  month = {May},
  location = {Calgary, Alberta},
  pages = {35--41}
}

@article{monk1986,
  author = {A. Monk},
  title = {Mode errors: A user-centred analysis and some preventative measures using keying-contingent sound},
  year = 1986,
  journal = {International Journal of Man-Machine Studies},
  volume = 24,
  pages = {313--327}
}

@article{nagel1974,
  author = {Thomas Nagel},
  title = {What Is It Like to Be a Bat?},
  journal = {Philosophical Review},
  year = 1974,
  volume = 83,
  pages = {435--450}
}

@inproceedings{pierce1999,
  author = {Jeffrey S. Pierce and Matt Conway and Maarten Van Dantzich and George Robertson},
  title = {Toolspaces and Glances: Storing, Accessing, and Retrieving Objects in 3D Desktop Applications},
  booktitle = {Proceedings of ACM I3D'99 Symposium on Interactive 3D Graphics},
  year = 1999,
  pages = {163--168}
}

@book{sartre1943,
  author = {Jean-Paul Sartre},
  title = {L'{\^E}tre et le N\'eant},
  year = 1943,
  note = {Translated by H. Barnes, Being and Nothingness}
}

@article{sellen1992,
  author = {Abigail J. Sellen and Gordon P. Kurtenbach and William A. S. Buxton},
  title = {The Prevention of Mode Errors Through Sensory Feedback},
  year = 1992,
  journal = {Human Computer Interaction},
  volume = 7,
  number = 2,
  pages = {141--164}
}

@inproceedings{vertegaal2002,
  author = {Roel Vertegaal and Yaping Ding},
  title = {Explaining Effects of Eye Gaze on Mediated Group Conversations: Amount or Synchronization?},
  booktitle = {Proceedings of ACM CSCW 2002 Conference on Computer Supported Collaborative Work},
  year = 2002,
  publisher = {ACM Press},
  location = {New Orleans}
}

Copyright © Michael McGuffin, 2002

Written between July 31 and August 9, 2002

Based on ideas developed in 1999

Minor updates performed Jan 11, 2003

Minor update performed Mar 12, 2003